ML Engine

Using the training samples and the learning process, the ML Engine builds an ML model. As mentioned earlier, the Machine Learning Engine is concerned with intent detection and entity extraction.

- The intent prediction model is trained using statistical modeling and neural networks. Intent classification tags the user utterance with a specific intent. The classification algorithm learns from a set of sample utterances that are labeled with how they should be interpreted. Preparing and curating training utterances is one of the most significant aspects of building a robust machine learning model.

- Entity detection involves recognizing System Entities (out-of-the-box, rule-based model), predicting Custom Entities (custom-trainable, rule-based model), and Named Entity Recognition (NER). System Entities are defined using built-in rules. With the NER approach, however, any named entity can be trained using machine learning by choosing values from the ML training utterances and tagging them against the named entity.

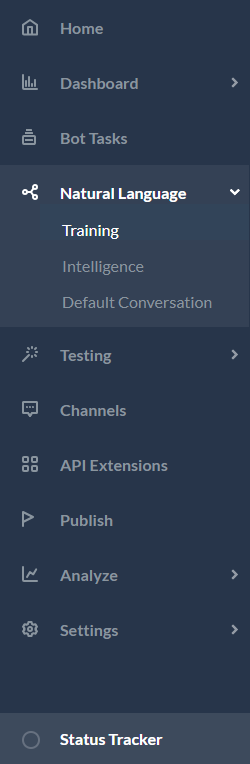

ML Training

The steps in training the ML engine are as follows:

- Choosing and gathering data that can be used as the training set

- Dividing the training set for evaluation and tuning (test and cross-validation sets)

- Training a few ML models according to algorithms (feed-forward neural networks, support vector machines, and so on) and hyperparameters (for example, the number of layers and the number of neurons in each layer for neural networks)

- Evaluating and tuning the model over test and cross-validation sets

- Choosing the best performing model and using it to solve the desired task
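The steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the platform's training code: the `SAMPLES` data, the n-gram overlap classifier, and all function names are hypothetical, and the only hyperparameter tuned is the maximum n-gram size.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy training set: (utterance, intent) pairs.
SAMPLES = [
    ("book a flight to boston", "book_flight"),
    ("i want to book a flight", "book_flight"),
    ("reserve a plane ticket", "book_flight"),
    ("book me a ticket to paris", "book_flight"),
    ("get me a flight tomorrow", "book_flight"),
    ("what is my account balance", "check_balance"),
    ("show my balance", "check_balance"),
    ("how much money do i have", "check_balance"),
    ("check balance of my savings account", "check_balance"),
    ("tell me my current balance", "check_balance"),
]

def ngrams(text, max_n):
    """Return all word n-grams of size 1..max_n."""
    tokens = text.lower().split()
    grams = []
    for size in range(1, max_n + 1):
        grams += [" ".join(tokens[i:i + size]) for i in range(len(tokens) - size + 1)]
    return grams

def split(samples, seed=0, train_frac=0.6, val_frac=0.2):
    """Step 2: divide the data into training, validation, and test sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    a = int(len(shuffled) * train_frac)
    b = a + int(len(shuffled) * val_frac)
    return shuffled[:a], shuffled[a:b], shuffled[b:]

def train(samples, max_n):
    """Step 3: a stand-in 'model' that counts n-grams per intent."""
    model = defaultdict(Counter)
    for text, intent in samples:
        model[intent].update(ngrams(text, max_n))
    return model

def predict(model, text, max_n):
    """Pick the intent whose training n-grams overlap most with the input."""
    grams = ngrams(text, max_n)
    scores = {intent: sum(1 for g in grams if g in counts)
              for intent, counts in model.items()}
    return max(sorted(scores), key=scores.get)  # ties resolved alphabetically

def accuracy(model, samples, max_n):
    return sum(predict(model, t, max_n) == y for t, y in samples) / len(samples)

def select_best(samples, candidate_ns=(1, 2, 3)):
    """Steps 4 and 5: tune on the validation set, then report on the test set."""
    train_set, val_set, test_set = split(samples)
    val_scores = {n: accuracy(train(train_set, n), val_set, n) for n in candidate_ns}
    best_n = max(sorted(val_scores), key=val_scores.get)
    test_accuracy = accuracy(train(train_set, best_n), test_set, best_n)
    return best_n, val_scores, test_accuracy

if __name__ == "__main__":
    print(select_best(SAMPLES))
```

The test set is touched only once, after the hyperparameter has been chosen on the validation set, so the reported accuracy is not biased by the tuning step.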

Tips for better ML training:

- Batch test suites are compulsory for comparing ML models. Run the batch suite, configure the parameters, re-run the suite, and compare the results.

- There is no set rule for which ML model to choose. It is a matter of trial and error: configure the engine, run batch suites, and compare the results.

- If your data set is large, the ML engine recognizes stop words and synonyms automatically, so you do not have to enable them explicitly.

- Check for influencer words and, if needed, add them as stop words for the n-gram algorithm.

- Prepare as diverse examples as possible.

- Avoid adding noise or pleasantries. If noise is unavoidable, add it so that it is equally represented across intents; otherwise, the model can easily overfit noise to intents.

The Confusion Matrix can be used to identify borderline training sentences and fix them accordingly. Each dot in the matrix represents an utterance and can be individually edited.
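As a rough illustration of the last tip, the sketch below counts how often a given set of noise words occurs in each intent's training utterances and flags words that are not equally represented. The function names, the sample data, and the strict "equal counts" rule are assumptions made for this example; the platform does not necessarily perform such a check.

```python
from collections import Counter

def noise_counts(training, noise_words):
    """Count occurrences of each noise word per intent.

    training: dict mapping intent name -> list of utterances.
    Tokens are split on whitespace only; punctuation is not handled.
    """
    counts = {}
    for intent, utterances in training.items():
        counter = Counter()
        for utterance in utterances:
            for token in utterance.lower().split():
                if token in noise_words:
                    counter[token] += 1
        counts[intent] = counter
    return counts

def unbalanced_noise(training, noise_words):
    """Return noise words whose counts differ between intents."""
    counts = noise_counts(training, noise_words)
    flagged = {}
    for word in sorted(noise_words):
        per_intent = {intent: counts[intent][word] for intent in training}
        if len(set(per_intent.values())) > 1:
            flagged[word] = per_intent
    return flagged

if __name__ == "__main__":
    training = {
        "book_flight": ["please book a flight", "book a ticket please"],
        "check_balance": ["show my balance", "what is my balance"],
    }
    print(unbalanced_noise(training, {"please"}))
```

In this toy data, "please" appears only in the `book_flight` utterances, so a model could learn to associate the pleasantry with that intent.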

The graph can further be studied for each of the following parameters:

- Get rid of false positives and false negatives by assigning the utterance to the correct intent. Click the dot and, on the edit utterance page, assign the utterance to the correct intent.

- Cohesion can be defined as the similarity between each pair of intents. The higher the cohesion, the better the intent training. Improve cohesion by adding synonyms or rephrasing the utterance.

- Distance is measured between each pair of training phrases in the two intents. The larger the distance, the better the prediction.

- Confusing phrases, that is, phrases that are similar between intents, should be avoided.
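To make the distance and confusing-phrase checks concrete, here is a minimal sketch that uses Jaccard similarity over word sets as a stand-in metric. The metric, the 0.5 threshold, and the function names are assumptions for illustration; the source does not state how the platform computes these values.

```python
from itertools import product

def jaccard(a, b):
    """Word-set overlap between two phrases, from 0.0 to 1.0."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)

def average_distance(intent_a, intent_b):
    """Mean distance (1 - similarity) over every cross-intent phrase pair."""
    pairs = list(product(intent_a, intent_b))
    return sum(1 - jaccard(a, b) for a, b in pairs) / len(pairs)

def confusing_pairs(intent_a, intent_b, threshold=0.5):
    """Phrase pairs from different intents that look too much alike."""
    return [(a, b) for a, b in product(intent_a, intent_b)
            if jaccard(a, b) >= threshold]

if __name__ == "__main__":
    transfer = ["transfer money to my account", "send money to john"]
    balance = ["show my account balance", "transfer balance to my account"]
    print(average_distance(transfer, balance))
    print(confusing_pairs(transfer, balance))
```

Pairs returned by `confusing_pairs` are candidates for rephrasing or for reassignment to the correct intent.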

The K-fold model is ideal for large data sets but can also be used for smaller data sets with two folds. Track and fine-tune the F1 score, precision, and recall as per your requirements. A higher recall score is recommended.
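For reference, the following sketch shows how K-fold splits and the three metrics are commonly computed. It is a generic illustration with hypothetical function names, not the platform's implementation; folds are contiguous and unshuffled for simplicity.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size

def precision_recall_f1(y_true, y_pred, positive):
    """Compute precision, recall, and F1 for one intent (the 'positive' class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

if __name__ == "__main__":
    # Two folds over five samples, as in the small-data case.
    for train, test in k_fold_indices(5, 2):
        print(train, test)
    print(precision_recall_f1(["a", "a", "b", "b"], ["a", "b", "b", "b"], "a"))
```

Recall is the share of an intent's utterances that the model actually catches, so favoring recall reduces the number of utterances that the intent misses.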

ML Process

Intent Detection

The diagram below summarizes the intent detection pipeline for both the training and prediction stages. In the training pipeline, language detection and auto-correction are not run. The assumption is that the trainer knows the language in which training needs to be done and the spellings to be used, which might include domain-specific non-dictionary words such as Kore.

Entity Extraction

Entity extraction involves identifying any information provided by the user, apart from the intent, that can be used in fulfilling the intent. Entities are of three types:

- System entities such as date, time, and color are provided out of the box by the platform. There are about 22 to 24 of them, and the ML engine recognizes them automatically with no training, except for the string and description entity types.

- Custom entities are defined by the bot developer. They include lists of values (enumerated, lookup, and remote), regular expressions, and composite entities. These are also mostly auto-detected by the ML engine.

- Named entity recognition (NER) needs training to distinguish different entities of the same entity type. For example, in a flight booking intent, the source and destination cities are both city-type entities, and the engine needs training to tell the two apart. NER can be conditional random field (CRF)-based or neural network (NN)-based. CRF is preferred since it works with less data and trains faster than the NN-based method.
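To illustrate what tagging values against named entities can look like, the sketch below converts an annotated utterance into token-level BIO labels, a labeling scheme commonly used to train CRF-based taggers. Whether the platform uses BIO labels internally is an assumption; the function name and the entity names `source_city` and `destination_city` are hypothetical.

```python
def bio_tags(utterance, annotations):
    """Convert tagged values into BIO labels.

    annotations: list of (value, entity_name) pairs, where each value is a
    word sequence that appears in the utterance. Returns (token, tag) pairs.
    """
    tokens = utterance.split()
    tags = ["O"] * len(tokens)
    for value, name in annotations:
        value_tokens = value.split()
        width = len(value_tokens)
        for i in range(len(tokens) - width + 1):
            span_is_free = all(tag == "O" for tag in tags[i:i + width])
            if tokens[i:i + width] == value_tokens and span_is_free:
                tags[i] = "B-" + name           # first token of the entity
                for j in range(i + 1, i + width):
                    tags[j] = "I-" + name       # continuation tokens
                break                           # tag the first free match only
    return list(zip(tokens, tags))

if __name__ == "__main__":
    tagged = bio_tags(
        "book a flight from New York to Boston",
        [("New York", "source_city"), ("Boston", "destination_city")],
    )
    for token, tag in tagged:
        print(token, tag)
```

Both cities share the same entity type, so it is the surrounding words ("from", "to") in many such labeled examples that let a trained model tell source from destination.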

The following diagram summarizes the NER entity extraction pipeline.

ML Output

The ML Engine runs classification against the user utterance and generates the following scores, which Ranking and Resolver uses to identify the correct intent:

- The probability score for each class/intent, which can be interpreted as follows:

  - Definitive Match/Perfect Match: If the probability score is >0.95 (default and adjustable).

  - Possible Match: If the score is <0.95, the intent becomes eligible for ranking against other intents that may have been found by other engines.

- The fuzzy score for each class/intent whose score is greater than the threshold score (default is 0.3). Fuzzy logic goes through each utterance of a given intent and compares it against the user input to see how close the two are. The scores usually range from 0 to 100 and can be interpreted as follows:

  - Definitive Match/Perfect Match: If the score is above 95% (default and adjustable).

  - Possible Match: If the score is <95%, the intent becomes eligible for ranking against other intents that may have been found by other engines.

- CR Sentences: The ML engine also sends the top 5 ML utterances for each intent that qualified against the threshold score. These 5 utterances are derived using the fuzzy score. Ranking and Resolver uses these CR sentences to rescore and choose the best utterance: it compares each utterance against the user input and chooses the one with the highest score.
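The scoring rules above can be sketched as follows. The thresholds are the defaults quoted in the text, but everything else is an assumption for illustration: the function names are hypothetical, a score exactly equal to the definitive threshold is treated as a possible match, and `difflib.SequenceMatcher` stands in for the platform's fuzzy logic, which the source does not specify.

```python
from difflib import SequenceMatcher

DEFINITIVE_THRESHOLD = 0.95  # default from the text; adjustable
ML_THRESHOLD = 0.3           # default threshold score from the text

def match_type(probability):
    """Label a probability score as a definitive or possible match."""
    return "definitive" if probability > DEFINITIVE_THRESHOLD else "possible"

def qualifying_intents(probabilities):
    """Keep only intents whose probability exceeds the threshold score."""
    return {intent: match_type(p)
            for intent, p in probabilities.items() if p > ML_THRESHOLD}

def fuzzy_score(user_input, utterance):
    """Stand-in similarity on a 0-100 scale (not the platform's algorithm)."""
    ratio = SequenceMatcher(None, user_input.lower(), utterance.lower()).ratio()
    return round(ratio * 100, 1)

def top_cr_sentences(user_input, utterances, k=5):
    """Return the k training utterances closest to the user input."""
    scored = [(fuzzy_score(user_input, u), u) for u in utterances]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

if __name__ == "__main__":
    print(qualifying_intents({"book_flight": 0.97, "cancel_flight": 0.4, "greet": 0.1}))
    utterances = [
        "book a flight",
        "book me a flight to boston",
        "i need a plane ticket",
        "reserve a seat on a flight",
        "get me a flight",
        "fly me to paris",
    ]
    for score, utterance in top_cr_sentences("book a flight", utterances):
        print(score, utterance)
```

Only the intents returned by `qualifying_intents` would have their top utterances passed on for rescoring.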

ML Engine Limitations

Though the ML model is very thorough and encompassing, it has its own limitations.

- When sufficient training data is not available, the ML model tends to overfit the small data set, which leads to poor generalization and, in turn, poor performance in production.

- Domain adaptation might be difficult if the model is trained on datasets originating from common domains such as the internet or news articles.

- Controllability and interpretability are hard because, most of the time, ML models work like a black box, making it difficult to explain the results.

- Cost is high in terms of both resources and time.

- The above two points also make maintenance and problem resolution expensive (again, in terms of both time and effort) and can result in regression issues.

Hence, ML engines augmented by FM engines yield better results. You can train the bot with a basic ML model and address any minor issues using FM patterns and negative patterns for idiomatic sentences, command-like utterances, and quick fixes.