ML Engine related

Split Compound Words

Compound words are formed when two or more words are joined together to create a new word that has an entirely new meaning. This is particularly the case with the German language, where two (or more) words are combined to form a compound, leading to an infinite amount of new compounds. For example, the components are connected with a transitional element, as the -er in Bilder | buch (picture book); or parts of the modifier can be deleted. For example, Kirch | turm (church tower), where the final -e of the lemma Kirche is deleted. Often the compound words mean something entirely different from the stem words. For example, Grunder (founder) with stem words grun | der (green|the). From an NLP perspective, it is important to understand when the NLP engines should split the words and process and when the entire word should be processed.

This setting is used to choose how the compound words should be processed. Once enabled, compound words present in the user utterance splits into their stem words and then considered for Intent Detection.

None Intent

The Machine Learning (ML) engine uses the training utterances to build a model to evaluate user utterances based on its training. The ML model tries to classify user input into one of these inputs. However, when there is an out of vocabulary word, ML tries to classify that too and this might hamper intent over an entity in some cases. For example, a person’s name at the entity node should not trigger any intent.

Adding an extra None Intent ensures classifying random input to these intents in the bot. Once enabled, the ML Model is tuned to identify these none intents when a user utterance contains the words that are not used in the bot’s training. i.e., bot vocabulary.

Externalization of ML Engine

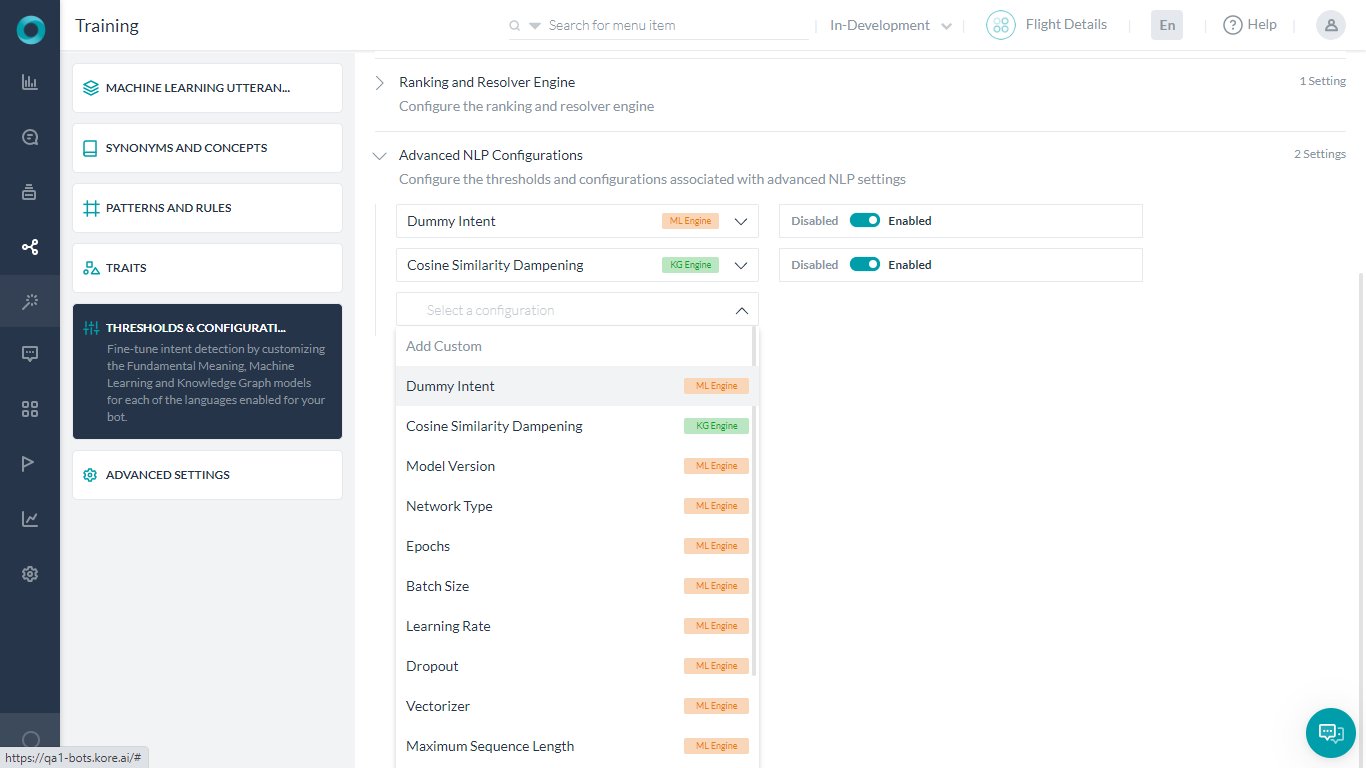

In machine learning, a hyperparameter is a parameter whose value is used to control the learning process. The hyperparameters provide you with additional customization options for your bots. The following are the ML configurations that can be customized.

Network Type

You can choose the Neural Network that you want to use. This setting is moved to the Machine Learning section post v8.1. Refer here for details.

Epochs

In terms of artificial neural networks, an epoch refers to one cycle through the full training dataset. To get a good performance on non-training data, it usually (but not always) takes more than one pass over the training data. The number of epochs is a hyperparameter that controls the number of complete passes through the training dataset.

Batch Size

Batch size is a term used in machine learning and refers to the number of training examples utilized in one iteration. It controls the accuracy of the estimate of the error gradient when training neural networks. The batch size is a hyperparameter that controls the number of training samples to work through before the model’s internal parameters are updated.

Learning Rate

In machine learning and statistics, the learning rate is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. It can be thought of as a parameter for controlling the weight update in the neural network based on the loss.

Dropout

The term dropout refers to dropping out units (both hidden and visible) in a neural network. Simply put, dropout refers to ignoring units (i.e. neurons) during the training phase of a certain set of neurons which is chosen at random. It is a regularization technique to prevent overfitting of data.

Vectorizer

Vectorization is a way to optimize algorithms by using vector operations for computations instead of element-by-element operations. It is used to determine the feature extraction technique on training data. It can be set to one of the following:

- Count Vectorizer is used to convert the given text documents to a vector of term/token counts based on the frequency (count) of each word occurrence in the text. This is helpful when there are multiple texts, and each word in the text needs to be converted into vectors for use in further text analysis. It enables the pre-processing of text data prior to generating the vector representation.

- TFIDF Vectorizer is a statistical measure that evaluates how relevant a word is to a document in a collection of documents. This is done by multiplying two metrics: how many times a word appears in a document (Term Frequency TF), and the Inverse Document Frequency (IDF) of the word across a set of documents.

Maximum Sequence Length

When processing a sentence (for training or prediction), the length of the sequence is the number of words in the sentence. The maximum sequence length parameter is the maximum number of words to be considered for training. If the user input or training phrase sentence sequence length is more than the maximum sentence length it is trimmed to this length and if it is less than then the sentence is padded with special tokens.

Embeddings Type

A (word) embedding is a vector representation of a word or phrase in an input/training text. Words with similar meaning will have similar vector representations in n-dimensional space and the vector values are learned in a way that resembles a neural network.

Embeddings Type can be set to one of the following:

- Random (default setting): At first, all the words are assigned random embeddings, then the embeddings are optimized for the given training data while training.

- Generated: Word Embeddings are generated just before the training starts. Word2Vec model is used for generating word embeddings. These generated embeddings are used while training. These generated word embeddings are optimized for the given training data while training.

Embeddings Dimensions

The embedding dimension defines the size of the embedding vector. If the word embeddings are random or generated, any number can be used as an embedding dimension.

K Fold Cross-Validation

Cross-validation is a resampling procedure used to evaluate machine learning models on a limited data sample. The procedure has a single parameter called k that refers to the number of groups that a given data sample is to be split into. This setting allows you to configure the K parameter. Refer here for more on cross-validation.

Fuzzy Match

Fuzzy matching is an approximate string matching technique that helps the system identify non-exact matches. The ML Engine uses fuzzy matching logic to identify definitive matches. The fuzzy match algorithm assigns a Fuzzy Search score to the intents based on their similarity with the user utterance. Any intent with a fuzzy match score of 95 or higher (on a scale of 0-100) is identified as a definitive match.

However, fuzzy matching can produce false positives when there are words with similar spellings but different meanings. For example, possible vs. impossible or available vs. unavailable. This behavior is problematic in some cases. You can disable this option and discourage the ML engine from using this matching algorithm.

Negation Handling

This setting is configured to choose the ML engine’s behavior when negated words are present in the user utterance. When the Negation Handling configuration is enabled, the intent’s ML score would be penalized if there are any negated predilection words present in the user utterance.

Ignore Multiple Occurences

Sometimes the intent identification gets skewed if multiple occurrences of the same word are present in the user utterance. When the Ignore Multiple Occurrences configuration is enabled, then multiple occurrences of the same word present in the user utterance are discarded. The repeated word is considered only once for the vectorization and the subsequent intent matching.

Entity Placeholders in User Utterances

Sometimes you want the system to replace the entity values present in the user utterance with entity placeholders so that the intent detection can be improved. Note that the entities that are not resolved by the NER model would not be used for replacement, so if you enable this option we strongly urge that you annotate all the training utterances. These entities are replaced in user utterance in End-user interactions, Batch testing, Utterance testing, Conversation testing.

Sentence Split

If the user input has multiple sentences, multiple intent calls are made, one for each sentence. This might not be an ideal situation in some cases. For example user utterance, I want to book tickets. Redirect me to Book My Show. will result in a 0.6 ML score for I want to book tickets and Redirect me to Book My Show and the total ML score of 0.6.

Disabling this configuration sends the original user input to ML for intent identification and results in a definite score like 0.99 for the above example.

Multiple Intent Model

Enabling this feature creates multiple ML intent models for your bot. All the Primary Dialog Intents will be part of the Bot Level Intent Model. Separate Dialog Level ML Models are created for each of the other Dialog Tasks and Sub Dialog Tasks, consisting of all the sub-intents used in the respective task definition. Refer here for details.

Neurons in Hidden Layer

Neurons in Hidden Layer determine the intensity/rigor to be adopted while performing intent identification by the ML Model. A higher number of neurons increases the accuracy but would require a longer duration for completing the training. A lower number of neurons decreases the accuracy but would speed up the training time. By default, it is fixed as 1000. Ideally, it should be 1x times the number of intents in a bot and can go up to 2x for better accuracy. This is a general recommendation and would vary depending on the quality of the training

Softmax Temperature

Softmax temperature allows you to define how confidently the ML Engine should identify the winning intent from the ML Model. Temperature is a hyperparameter that is applied to logits (Model outputs) to affect the final probabilities from the softmax. Any value between 0 to 1 indicates that the ML Engine should identify the winning intent with lower confidence. 0 being very low confidence and 1 being regular confidence. Any value between 1 to 100 indicates that the ML Engine should associate a high amount of confidence for the winning intent. 1 being regular confidence and 100 being the higher confidence possible.

Spell Correction in ML

For bots in the English language, spell correction does not happen on the ML bot dictionary. This might cause an issue for bots that are heavily dependent on ML training. The issue can be rectified by enabling spell correction on the ML bot dictionary while predicting. This is achieved by adding custom config in NLP Advanced Settings.

This is a Custom configuration, to enable follow these steps:

- Add Custom

- Enter name as ML_spell_correction

- Enter the value as enabled or disabled