Knowledge Graph

For executable tasks, the intent is identified based on either the task name (used in the Fundamental Meaning model) or machine learning utterances defined for a task. This approach is appropriate when a task can be distinctively identified from other tasks, using language semantics, and statistical probabilities derived from the machine learning model.

In the case of FAQs, this approach may not fare well as most of the FAQs are similar to each other in terms of semantic variation, and will require additional intelligence about the domain to find a more appropriate answer.

Kore.ai’s Knowledge Graph-based model provides that additional intelligence required to represent the importance of key domain terms and their relationships in identifying the user’s intent (in this case the most appropriate question).

We will use the following two examples to explain the different configurations required to build a Knowledge graph.

| Example A |

Example B |

|

Consider a bot trained with the following questions:

- A1: How to apply for a loan?

- A2: What is the process to apply for insurance?

|

Consider a bot trained with the following questions:

- B1: Can I open a joint account?

- B2: How do I add a joint account holder?

- B3: How can I apply for a checkbook?

- B4: How do I apply for a debit card?

|

The following are a few challenges with intent recognition using a typical model based on pure machine learning and semantic rules:

- Results obtained from machine learning-based models have a tendency to produce a false positive result if the user utterance has more matching terms with the irrelevant question.

- The model fails when the bot needs to comprehend based on domain terms and relationships. For example, the user utterance What is the process to apply for a loan? will incorrectly fetch A2 as a preferred match instead of A1. As A2 has more terms matching with user utterance than A1.

- This model fails to fetch the correct response if part of a question is stated in a connection with another question. Example, A: the user utterance I have applied for a loan, can I get insurance results in the ambiguity between A1 & A2. Example B: User utterance I have opened a joint account, can I have a debit card will incorrectly match B1 over B4.

In the Kore knowledge graph model, having all the questions at the root level is equivalent to using a model based on term frequency and semantic rules.

Key Domain Terms and their Relationship

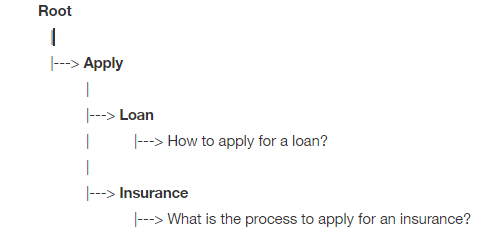

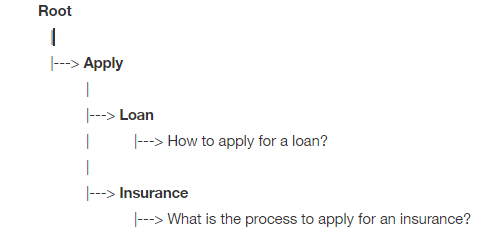

Identifying key terms and their relationships is an important aspect of building ontology. Let us understand this using our sample Example A. Both A1 and A2 are about an application procedure, one talks about applying for a loan while the other talks about applying for insurance. So while creating an ontology, we can create a parent node apply with two child nodes as loan and insurance. Then A1 and A2 can be assigned as child nodes of loan and insurance node respectively.

Representation of Knowledge Graph

Example A

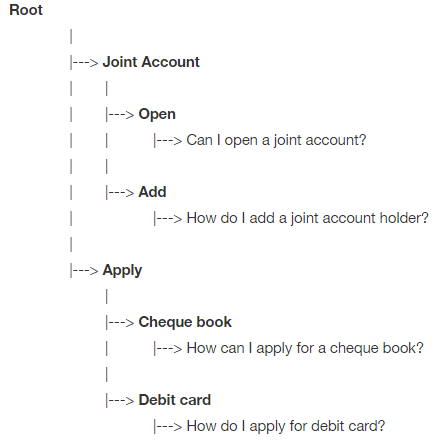

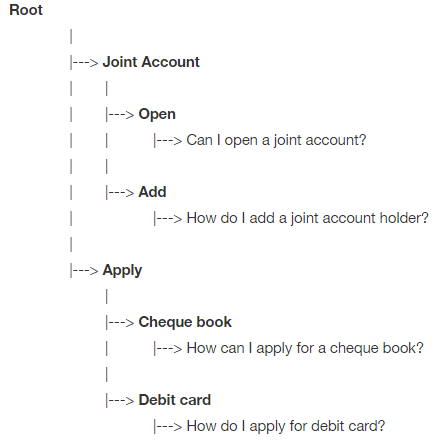

Similarly, in the case of Example B, the graph will be as below

Capabilities of a Graph Engine

- Ease of Training using Synonyms: Kore.ai’s Knowledge Graph has a provision to associate synonyms against a graph node. This helps capture the variation in a question. For example, In Example A above, a user can put get as a synonym to apply.

- Better Coverage with Alternate Questions: Knowledge Graph has a provision to add alternate questions. This helps us to capture the various ways a user might ask the same question. In Example B above, for the question How do I add a joint account holder we can add an alternate question as Can I add my wife as a joint account holder.

- Improved Accuracy: Ontology-driven question-answers reduce the possibility of false positives. For example, for the user utterance What is the process to apply for SSN? a term frequency-based model will incorrectly suggest A2 as a match. An ontology-driven model has the capability to prevent such false positives.

- Weighing Phrases using Classes: Kore.ai’s graph engine has introduced a concept of classes for filtering out irrelevant suggestions. See the below section on classes for a detailed explanation

- Ability to Mark Term Importance: Kore.ai’s graph engine has a provision to mark an ontology term is important. For example, in the question, How to apply for a loan, loan is an important term. If a loan keyword is not present in the user utterance, then it makes little sense to fetch A1. Whereas in term frequency-based model a user utterance How to apply for a will incorrectly fetch A1.

- Ability to Group Relevant Nodes: As the graph grows in size, managing graph nodes can become a challenging task. Using the organizer node construct of the ontology engine, the bot developer can group relevant nodes under a node.

Knowledge Graph Traits

Note: Traits replace Classes from v6.4 and before.

When using trait ensure you use it judicially as over usage may result in false negatives. When using classes ensure:

- Good coverage of classes.

- Classes should not get generalized improperly.

- All the FAQs get tagged to mutually exclusive classes.

Following is the example of how classes work: Let’s say we create a class called Request and add request related phrases to it. If the user says I would like to get WebEx and I would like to get is trained for the Request class, this FAQ is only considered across the Knowledge Graph paths where the word request is tagged with. This is a positive scenario. But if the user says Can you help with getting WebEx?, and we did not have similar utterances trained for the Request class, it gets tagged with None class, and this FAQ is only used with the paths where the word request isn’t present. This results in a failure.

Another possibility is that if the user says I want to request help fixing WebEx and we have trained the Request class with some utterances having I want to request, and based on the training provided across all classes, the engine may generalize and tag this feature (phrase containingIwant to request ) to the Request class. In this case, if the Request class is not present in the help path for WebEx, this results in failure of identifying the input against help > WebEx.

The cases where classes are useful is when we have a mutually exclusive set of FAQs. For example, if we have a set of FAQs for Product issues and also a set of FAQs for the Process of buying a product.

FAQs for Process for buying a product:

- How do I buy Microsoft Office online?

- What is the process for buying anti-virus software?

FAQs for Product Issues:

- I am having issues installing software

- How to resolve an issue with antivirus

When a user says What is the process for fixing antivirus when it doesn’t work?, the engine may find that this input is similar to both A2 and B2, and may present both of them as suggestions. We know that Issue is mutually exclusive to buy, and it does not make sense present buy related FAQs at all, in this case. The opposite (matching Issue FAQs for buy related question) may be a much bigger problem. To solve this, we will create two classes, one for type issues and another for buy. Every input is classified into either buy or issue and only the relevant questions will be used in finding an appropriate answer.